Wekinator – Gesture Control for Performance with Machine Learning

Recording of the February 7th session, in English (1 hr 30 min)

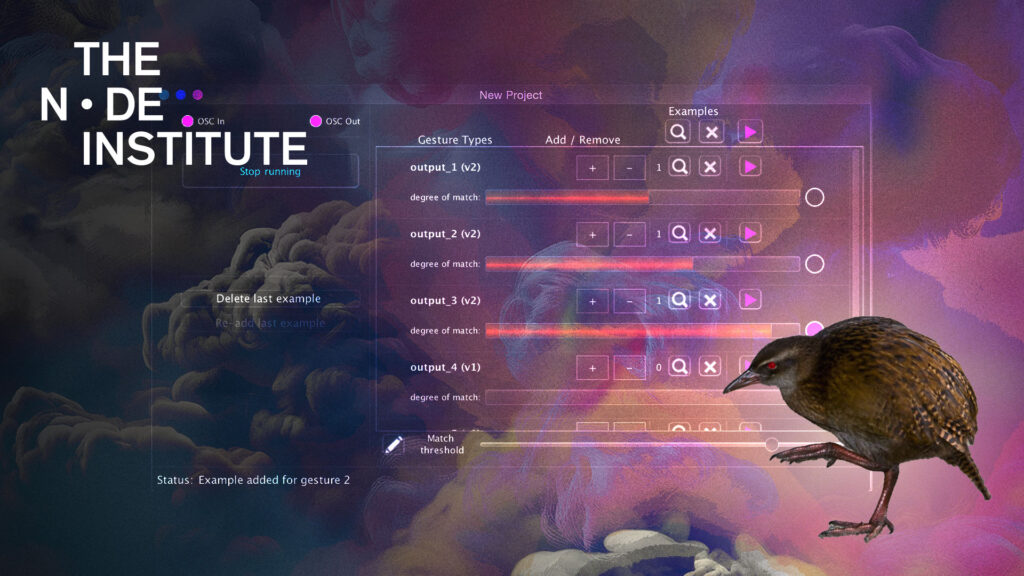

About Wekinator

The Wekinator is free, open-source software created in 2009 by Rebecca Fiebrink. It connects easily to dozens of other creative coding tools and sensors, and it allows anyone to use machine learning to build new musical instruments, gestural game controllers, computer vision or computer listening systems, and more. Because it learns to recognise human actions from examples, users can build new interactive systems without writing programming code:

Create mappings between gestures and computer sounds, control a drum machine using your webcam, play Ableton using a Kinect, or use facial expressions to change the mood of the lighting.

Control interactive visual environments created in TouchDesigner, vvvv, Processing or game engines like Unity and Unreal Engine using gestures sensed from a webcam, Kinect, Arduino and many other input devices. Build classifiers to detect which gesture a user is performing, then use the identified gesture to control the computer or to give the user feedback on how they are doing.

Detect the instrument, genre, pitch, rhythm and other properties of audio coming in through the microphone, and use them to control computer audio, visuals or data visualisations. Anything that can output OSC (Open Sound Control) can be used as a controller, and anything that can be controlled by OSC can be controlled by Wekinator.
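To make the OSC side more concrete, here is a minimal Python sketch that sends two input values to Wekinator using only the standard library. It assumes Wekinator's default input settings as we understand them (UDP port 6448, address `/wek/inputs`, every argument a 32-bit float); check the values shown in your own Wekinator project before relying on them. The function names are hypothetical helpers, not part of any Wekinator API.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires for strings."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_floats(address: str, values: list[float]) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)  # e.g. ",ff" for two floats
    payload = osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii"))
    for value in values:
        payload += struct.pack(">f", value)  # OSC floats are big-endian float32
    return payload


def send_inputs(values, host="127.0.0.1", port=6448, address="/wek/inputs"):
    """Send one feature vector over UDP (assumed Wekinator defaults: port 6448, /wek/inputs)."""
    message = encode_osc_floats(address, values)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))


if __name__ == "__main__":
    # Two example input features, e.g. a normalised hand x/y position.
    send_inputs([0.5, 0.25])
```

In practice a sensor, webcam tracker or audio analyser would call `send_inputs` continuously, and the number of values must match the number of inputs configured in Wekinator.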

Learn more about The Wekinator here

About this course

Learn how to use Wekinator in your own creative projects to derive simple control data from complex human interactions. Understand how to integrate various data sources, whether sensors, cameras, microphones or music, to recognise patterns and reduce complex actions to simple commands without expert knowledge of machine learning or OpenCV.

The first part of the course covers the use cases of Wekinator, how it works, how to integrate diverse inputs, and how to train machine learning algorithms to recognise human gestures, facial expressions or sounds and send the results to any software that understands OSC.
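On the receiving side, any program that can read OSC can use Wekinator's results. The following minimal Python sketch decodes float-only OSC messages and prints the values arriving at `/wek/outputs`. It assumes Wekinator's default output settings as we understand them (UDP port 12000, address `/wek/outputs`, float arguments) and handles only plain messages, not OSC bundles; the function names are hypothetical helpers, not a Wekinator API.

```python
import socket
import struct


def read_padded_string(data: bytes, offset: int) -> tuple[str, int]:
    """Read a null-terminated OSC string starting at a 4-byte-aligned offset."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # Skip the terminator plus padding up to the next 4-byte boundary.
    next_offset = (end + 4) & ~3
    return text, next_offset


def decode_osc_floats(data: bytes) -> tuple[str, list[float]]:
    """Decode an OSC message whose arguments are all 32-bit floats."""
    address, offset = read_padded_string(data, 0)
    tags, offset = read_padded_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    values = []
    for tag in tags[1:]:
        if tag != "f":
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
        (value,) = struct.unpack_from(">f", data, offset)  # big-endian float32
        values.append(value)
        offset += 4
    return address, values


def listen(port=12000, address="/wek/outputs"):
    """Print every output vector received on the (assumed) default Wekinator output port."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("127.0.0.1", port))
        while True:
            packet, _ = sock.recvfrom(4096)
            if packet.startswith(b"#bundle"):
                continue  # bundles are not handled in this sketch
            received_address, values = decode_osc_floats(packet)
            if received_address == address:
                print(values)
```

Calling `listen()` blocks and prints each output vector as it arrives; in a real project vvvv, TouchDesigner or another OSC-capable tool would consume these values instead.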

In the second part, we will work through a simple, practical example to understand how to use Wekinator alongside vvvv, TouchDesigner and other programs to add intuitive, human-centered interaction to your projects.

What You’ll Get

- Access to all course materials and the recordings for one year after purchase

- Downloadable resources, including project examples for vvvv and TouchDesigner

- Access to a private community forum for networking, feedback and support

Requirements

This module is aimed at participants who have some basic experience with creative software environments such as TouchDesigner, vvvv, Notch, Cables, Processing, openFrameworks, Max/Jitter and similar, or with show creation tools such as Resolume, Ableton, Bitwig, GrandMA and similar, so that they understand the context in which Wekinator can simplify interactions.

- A recent PC or Mac with a stable internet connection

- Zoom client installed (please test your audio setup beforehand)

- 3-button mouse recommended

About the Instructor

Sebastien Escudié is a French creative coder and back-end developer who has built many interactive vvvv installations. Seb contributes to the development of VL.Elementa, a GUI library for the VL programming language, and has wrapped many libraries for vvvv gamma, as you can see on his GitHub profile. He has also wrapped many REST APIs, both for libraries and apps (GammaLauncher, VL.OpenWeather…) and for commercial projects.

Pricing

Student – 1 session at 65 EUR = 65 EUR

Regular – 1 session at 100 EUR = 100 EUR

Company – 1 session at 200 EUR = 200 EUR

These prices include German VAT (19%).

Depending on your country of residence, you may have to pay a different VAT rate or no VAT at all.

You will see your individual price on check out.