UVW Coordinates

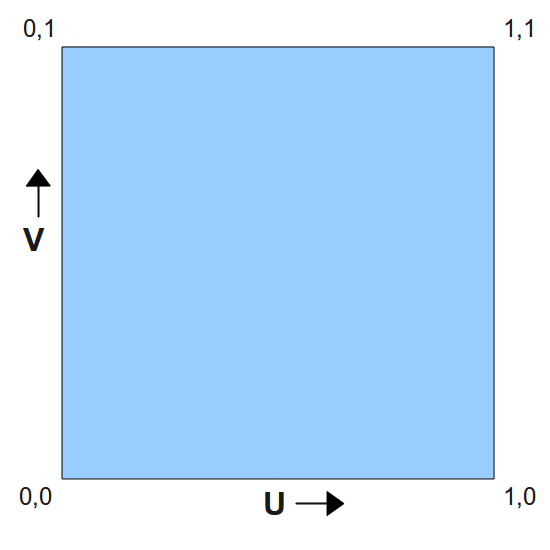

Imagine unfolding a 3D model (like a cube or sphere) into a flat 2D pattern. UV coordinates are like the coordinates of each point on that unfolded pattern, allowing you to map a 2D texture onto the 3D model.

UV coordinates are two-dimensional texture coordinates used to map 2D textures onto 3D models. They define the position of a vertex on the 2D texture, allowing you to specify which part of the texture should be applied to which part of the 3D model.

UV coordinates are normalized to a range of 0.0 to 1.0, where (0, 0) is the bottom-left corner of the texture and (1, 1) is the top-right corner.

Each vertex on the 3D model has associated UV coordinates. These coordinates determine which point on the 2D texture is applied at that vertex; across each face, the UVs are interpolated between its vertices.
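To make the mapping concrete, here is a minimal sketch of how a normalized UV pair could be turned into a pixel index. The function name `uv_to_pixel` and the clamping behaviour are assumptions made for illustration; real renderers usually also filter between neighbouring pixels rather than picking exactly one.

```python
def uv_to_pixel(u, v, width, height):
    """Map normalized UV (origin bottom-left) to integer pixel indices (col, row).

    Row 0 is the bottom row of the texture, matching the
    (0, 0) = bottom-left convention.
    """
    # Clamp to [0, 1] so out-of-range coordinates land on an edge pixel
    # (an illustrative choice; textures can also repeat or mirror).
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    # Scale by the resolution; min() keeps u == 1.0 inside the last pixel.
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return col, row


print(uv_to_pixel(0.0, 0.0, 512, 256))  # (0, 0): bottom-left pixel
print(uv_to_pixel(1.0, 1.0, 512, 256))  # (511, 255): top-right pixel
print(uv_to_pixel(0.5, 0.5, 512, 256))  # (256, 128): center
```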

UV coordinates can also be used for other purposes like vertex colors, controlling surface flow, and other effects.

TIP: By using a Point SOP, you can swap the position and the texture coordinates. This allows you to model the “texture space”. Another Point SOP allows you to swap the position and texture back to their original locations.

Bit Depth

In the context of Computer-Generated Imagery (CGI), bit depth refers to the number of bits used to represent the color and brightness information for each pixel in an image or video. Essentially, it determines the range of possible colors and shades that can be accurately captured, processed, and displayed.

Bits and Color:

A bit is a binary digit, either 0 or 1. With each additional bit, the number of possible values (and thus colors/shades) doubles.

8-bit per channel (24-bit total RGB):

This is often called “true color” and allows for $2^8 = 256$ shades per color channel. For RGB, this totals $256^3 \approx 16.7$ million colors. While this is the standard for most consumer displays and web images, it has limitations for CGI:

- Banding: In smooth gradients (like a sky or a rendered surface with subtle lighting), you might see visible “steps” or bands of color instead of a continuous blend. This is because there aren’t enough discrete shades to represent the transition smoothly.

- Limited dynamic range: It struggles to accurately represent very bright or very dark areas of a scene, leading to loss of detail in highlights and shadows.

- Post-processing limitations: When performing color correction or visual effects, 8-bit images can quickly fall apart, showing artifacts as you manipulate the limited color information.
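The banding problem can be illustrated with a small sketch. It samples a subtle gradient (brightness 0.40 to 0.42 across a 1920-pixel-wide image, values chosen only for illustration) and counts how many distinct codes survive at a given integer bit depth. The helper names are hypothetical.

```python
def quantize(value, bits):
    """Convert a normalized value in [0, 1] to an integer code of the given bit depth."""
    levels = (1 << bits) - 1  # 255 for 8-bit, 65535 for 16-bit
    return round(value * levels)


def distinct_levels(start, end, samples, bits):
    """Count how many different codes a linear gradient produces."""
    codes = set()
    for i in range(samples):
        value = start + (end - start) * i / (samples - 1)
        codes.add(quantize(value, bits))
    return len(codes)


levels_8 = distinct_levels(0.40, 0.42, 1920, 8)
levels_16 = distinct_levels(0.40, 0.42, 1920, 16)
print(levels_8)   # 6: the whole gradient collapses into six flat bands
print(levels_16)  # over a thousand codes, so steps are far below visibility
```

With only six codes spread over 1920 pixels, each band is hundreds of pixels wide, which is why the steps become visible in skies and soft lighting.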

16-bit per channel (48-bit total RGB):

This provides $2^{16} = 65{,}536$ shades per channel, leading to over 281 trillion colors. This is a common bit depth for professional CGI workflows, especially when working with:

- High Dynamic Range (HDR): 16-bit floating-point (half-float) images, typically stored in formats such as OpenEXR, are widely used. These can store values brighter than “white” and darker than “black,” preserving a massive amount of lighting information from the render. This is crucial for realistic lighting and compositing.

- Smooth gradients and complex lighting: The increased precision allows for incredibly smooth transitions and accurate representation of subtle lighting variations.
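As a rough sketch of the HDR point, the snippet below compares an 8-bit fixed-point store with a half-float round trip using Python's `struct` module (the `'e'` format code is IEEE 754 half precision). The helper names `to_half` and `to_uint8` are made up for this example.

```python
import struct


def to_half(x):
    """Round-trip a Python float through IEEE 754 half precision (16-bit float)."""
    return struct.unpack('<e', struct.pack('<e', x))[0]


def to_uint8(x):
    """Store a normalized value as 8-bit fixed point, clamping to [0, 1]."""
    return round(min(max(x, 0.0), 1.0) * 255)


highlight = 4.0  # a pixel four times brighter than display "white"
print(to_uint8(highlight) / 255)  # 1.0: the highlight is clipped to white
print(to_half(highlight))         # 4.0: the half float keeps the real value
print(to_half(-0.25))             # -0.25: values below "black" survive too
```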

32-bit per channel (96-bit total RGB):

Often represented as “32-bit float,” this offers an extremely vast range and precision. While not always used for the final rendered image, it’s frequently used internally for calculations. The extra precision limits the accumulation of rounding errors during complex operations and helps maintain the integrity of the data.
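Error accumulation can be demonstrated with a deliberately simple sketch: add 0.001 ten thousand times, rounding the running total to the storage precision after every step. This is an illustrative worst case rather than how any particular renderer works, and the helper names are assumptions.

```python
import struct


def to_half(x):
    """Round a value to 16-bit float precision."""
    return struct.unpack('<e', struct.pack('<e', x))[0]


def to_single(x):
    """Round a value to 32-bit float precision."""
    return struct.unpack('<f', struct.pack('<f', x))[0]


def accumulate(step, count, rounder):
    """Sum `step` repeatedly, rounding to the storage precision each time."""
    total = 0.0
    increment = rounder(step)
    for _ in range(count):
        total = rounder(total + increment)
    return total


half_sum = accumulate(0.001, 10_000, to_half)
single_sum = accumulate(0.001, 10_000, to_single)
print(half_sum)    # stalls well short of the exact answer, 10.0
print(single_sum)  # very close to 10.0
```

Once the running total grows large enough, the increment falls below half the spacing between adjacent half-float values and every further addition is rounded away. The 32-bit total has far finer spacing at the same magnitude, so the same loop stays close to the exact result.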

Why Bit Depth Matters in TouchDesigner

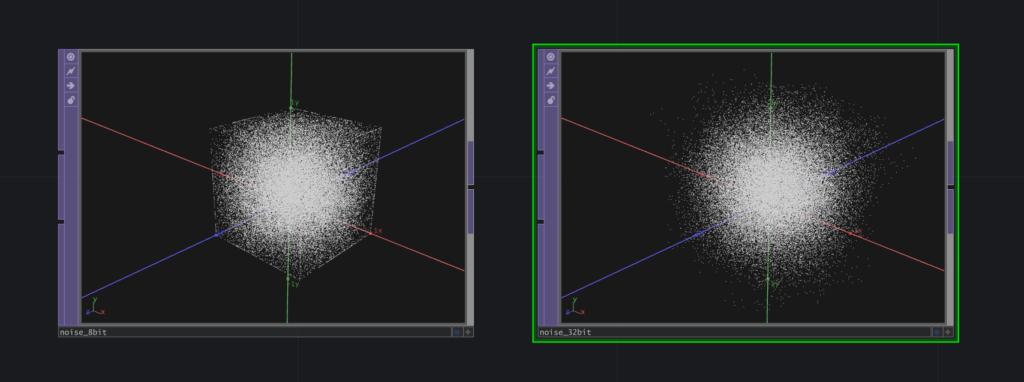

All of the above (regarding bit depth and its advantages) applies to rendering and compositing in TouchDesigner as well. However, its importance becomes particularly pronounced when you need to store and process large amounts of diverse data for parallel computation, such as when reading and writing particle and point cloud attributes (like positions, velocities, colors, normals, etc.).

An 8-bit per channel (e.g., 24-bit total) integer color space is inherently limited: each channel typically stores an integer from 0 to 255. To represent 3D positions this way, you usually have to normalize them into a limited range, such as a [0, 1] cube. This constraint can lead to significant precision loss and quantization artifacts in high-resolution simulations or large scenes, because there are only 256 distinct values per axis.
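The following sketch shows what that quantization looks like for a single axis. It assumes a hypothetical scene spanning -10 to 10 units that is normalized into [0, 1] before being stored in one 8-bit channel; the function names and the scene bounds are illustrative only.

```python
def encode_8bit(x, lo, hi):
    """Normalize x from [lo, hi] to [0, 1], clamp, and store as an 8-bit code."""
    t = (x - lo) / (hi - lo)
    t = min(max(t, 0.0), 1.0)
    return round(t * 255)


def decode_8bit(code, lo, hi):
    """Recover an approximate position from an 8-bit code."""
    return lo + (code / 255) * (hi - lo)


lo, hi = -10.0, 10.0
step = (hi - lo) / 255  # smallest representable move along this axis
print(step)             # about 0.078 units per code

# Two nearby particles end up with the same code, so they snap together.
a = decode_8bit(encode_8bit(1.23, lo, hi), lo, hi)
b = decode_8bit(encode_8bit(1.25, lo, hi), lo, hi)
print(a == b)  # True

# A particle outside the chosen bounds is clamped to the edge.
print(decode_8bit(encode_8bit(25.0, lo, hi), lo, hi))  # 10.0
```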

In contrast, a 32-bit floating-point (float) data type offers a vastly larger range and significantly higher precision. This makes it indispensable for particle and point cloud animation and simulation because:

- Positions: It allows particles to exist almost anywhere in 3D space with fine precision (roughly seven significant decimal digits), avoiding both “snapping” to a coarse grid and clipping at the edge of a fixed range.

- Velocities and Accelerations: These values can span a wide range and often require fractional precision for smooth motion.

- Other Attributes: Normals, custom data, or simulation parameters can be stored with the necessary accuracy without being clamped or losing detail.

This high precision helps simulations remain stable, accurate, and free from visible quantization artifacts, even with millions of particles and complex interactions.

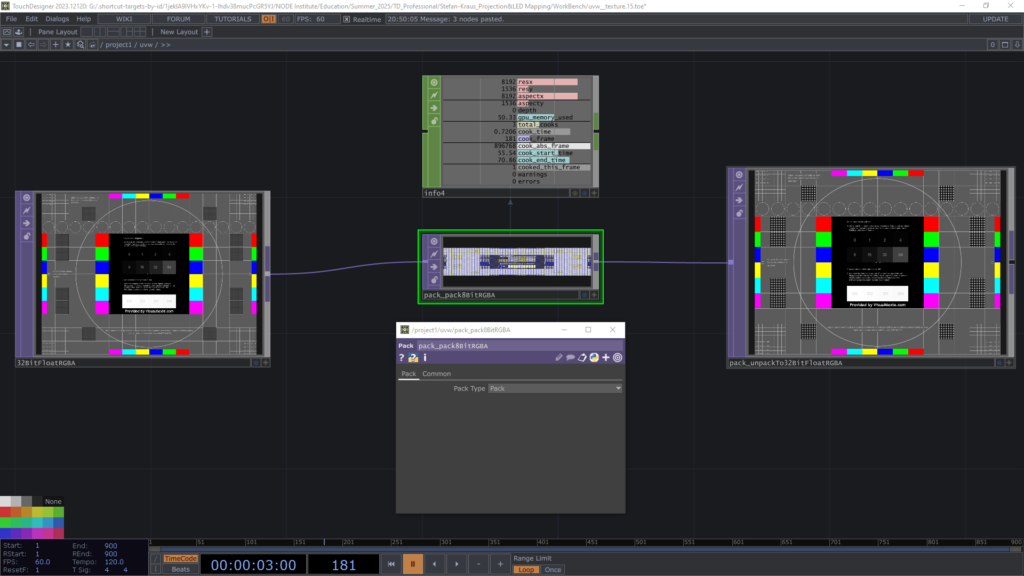

Pack Up and Send

The packTOP packs 32-bit RGBA floating-point images into a quadruple-wide 8-bit fixed-point image, making them easier to transport through lossless file formats that support only 8 bits per channel, or through 8-bit protocols like Spout/Siphon. A second packTOP can then unpack the image on the receiving side.
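The general idea can be sketched in plain Python: one 32-bit float RGBA pixel occupies 16 bytes, which is exactly the storage of four 8-bit RGBA pixels, hence the quadruple width. The byte order and layout below are assumptions made for illustration and are not necessarily how the operator arranges its output.

```python
import struct


def pack_pixel(rgba):
    """Split four 32-bit floats into 16 byte values (four 8-bit RGBA pixels' worth)."""
    return list(struct.pack('<4f', *rgba))


def unpack_pixel(byte_values):
    """Reassemble the four 32-bit floats from their 16 byte values."""
    return struct.unpack('<4f', bytes(byte_values))


# Values chosen to be exactly representable as 32-bit floats.
pixel = (1.5, -2.25, 1000.0, 0.125)
packed = pack_pixel(pixel)
restored = unpack_pixel(packed)

print(len(packed))        # 16 values, each in the range 0..255
print(restored == pixel)  # True: the round trip is lossless
```

Because the bytes are carried verbatim, the transport in between has to be lossless; any lossy compression or color conversion would corrupt the reassembled floats.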